The Path To AGI: Intelligent Architecture vs. Wrappers and Models

Just a few days ago, I announced the first stable version of the TechDex AI Framework. It’s been my own personal AI-baby for just over a year. Building AI architecture has been the most challenging, frustrating, humbling, exciting, and fulfilling thing I’ve ever done in my career.

And here I am today, with what is quite probably the world’s first shippable intelligence, and I can’t help but reflect back on the past year and what I’ve learned.

It’s one thing to have theory – the books, the blogs, the documentation, the videos, etc. of people talking about what AI is and what they think it will become, but it’s something else entirely to have the experience of architecting it and building out a system to production.

Most developers enter AI through the front door: a model, an API, a prompt, a wrapper, a UI… and that’s how I started too, but that’s not the route I ultimately took.

I backed out quickly and decided to enter another way because, as I progressed, I realized very quickly that what the industry calls “AI” isn’t really AI. Not in the way I was led to believe. While the bots and wrappers and stuff were very clever, they weren’t intelligence.

They were scaffolding. They were barely more than an interface that plugged into actual infrastructure. The infrastructure, the architecture, the system… THAT is what I was interested in.

See, what I realized is the foundational difference between what most people are building and what I am building: an intelligent system vs. a model interface.

There’s a term that keeps popping up the deeper I get into the architecture: AGI, or Artificial General Intelligence, and it got my attention pretty early on.

It refers to a type of artificial intelligence that possesses the ability to understand, learn, and apply knowledge in a manner similar to human cognition. It’s often described as having the capability to perform any intellectual task that a human can.

Now, this level of AI is still largely theoretical, and it represents an area of active research within the field of artificial intelligence, and to date, no one has really crossed that barrier.

I intend to be one of the first, (if not the first), because eventually someone will, so why not me?

But that’s not why I’m writing this article.

I’m writing this article because I have a lot to share about what I’ve learned over the past year, and I think much of it is going to be extremely helpful to those invested in AI.

So, this article is to both share my philosophy and serve as a roadmap for developers who want to understand what real intelligence systems require, for executives deciding what level of AI infrastructure their company needs, and for the future architects who will build the systems that outlive the hype cycle.

Let’s begin at the root – fair warning… I may use creative analogies.

1. Wrappers Don’t Scale, Don’t Think, and Don’t Evolve

As I said, I started out in the same place as almost everyone else using tools, following tutorials, and basically mass testing various models. That’s when I realized that almost every “AI product” on the market is not an AI system – it’s a wrapper around a model.

The problem I found with wrappers is that they:

- do not understand context

- cannot maintain object permanence

- rely entirely on prompts

- collapse the moment a conversation deviates

- cannot genuinely learn

- do not route information

- cannot arbitrate between competing data sources

That’s not intelligence. That’s I/O with flair. Models are powerful, and there will always be a place for them, but without architecture, you get:

- hallucinations

- brittle context

- zero memory

- no identity

- no reasoning layers

- and a complete dependency on the LLM to “guess correctly.”

And after having deployed three shipped intelligences and hearing the complaints, I can say without a doubt that developers know this, executives feel this, and users experience this.

But here’s the thing – the solution is not “a better model,” and it’s not more training.

Artificial intelligence, as the name implies, is plenty smart, yet every dev I’ve talked to says the same thing – more training, as if more training will magically make the model behave intelligently.

The solution isn’t a better model or more training. The solution is a system around the model – one capable of making decisions before a token is ever generated.
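To make "decisions before a token is ever generated" concrete, here is a minimal sketch in Python. It is not code from any real framework: the names `Decision`, `decide`, and `respond`, and the toy routing rules, are all assumptions chosen for illustration. The point is only that a layer in front of the model can choose between recalling, searching, and generating.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Decision:
    action: str             # "recall", "search", or "generate"
    answer: Optional[str]   # filled in when no model call is needed


def decide(query: str, memory: dict[str, str]) -> Decision:
    """Pick an action before any token is generated (hypothetical rules)."""
    key = query.strip().lower()
    if key in memory:                    # already known: no model call
        return Decision("recall", memory[key])
    if key.startswith(("who", "what", "when", "where")):
        return Decision("search", None)  # factual: look it up first
    return Decision("generate", None)    # open-ended: let the model write


def respond(query: str, memory: dict[str, str],
            model: Callable[[str], str]) -> str:
    decision = decide(query, memory)
    if decision.answer is not None:
        return decision.answer
    # Only now does the model run, and it is told which path was chosen.
    return model(f"[{decision.action}] {query}")
```

In this sketch a remembered answer never reaches the model at all, which is one simple way a system around the model can cut both token usage and guessing.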

This is where architecture comes in, and it’s important to know the difference.

And understanding the difference between a model and architecture is what will change the game for developers, executives and users alike.

2. Architecture Determines Intelligence

The most important principle I’ve learned over the last year is that the intelligence of AI is in the architecture, not the model. The model is just a tool. It produces output. Architecture produces intelligence.

See, most people think “model” when they talk about what they want their AI to do, but what they don’t realize is that they’re naming features of architecture.

Architecture defines:

- what the system pays attention to

- how it routes information

- what memory it keeps

- how it interprets intent

- how it stabilizes persona

- how it judges relevance

- how it grounds itself in truth

- how it avoids hallucination

- when to think, search, or recall

- how it maintains continuity across sessions

A model cannot do that by itself, and a wrapper cannot do that at all – only architecture can, and I believe the way we think about AI has to fundamentally change if we are to see it come to its full potential.

To put some beans out there, one of the things I do, (as strange as it seems), is actively daydream. I’ll close my eyes and put myself into a state mentally where I’m living in a moment in the future. I immerse myself in that place and make it as real as possible.

I’ll smell the smells, see the sights, hear the sounds – completely immerse myself in that moment. I really let myself feel it emotionally. It’s not “having a vision” or anything woo-woo like that. It’s just a way for me to gain experience, ground myself in a goal, and operate on a level where I’ve already accomplished the thing I want.

I find it much easier to achieve a goal if I work from an embodied position and belief that I have already accomplished it. It may seem delusional to others, because I will often speak about future goals in the present tense like it’s happening, but more so in past tense like it already happened.

To me it’s not delusional – it’s an inevitability, and I’m 100% sure of the outcome because I’ve already experienced it. It gives me clarity and focus in a way I can’t adequately explain, and it grounds me in that goal.

Anyway, I want to share a vision I had.

I put myself into state, (that’s what I call it), and in that state I was at an event presenting my AI framework. I’m showing case studies of companies that went all in during the BETA, and the first thing I showed was their operational overhead decreasing.

Companies that had hundreds of thousands, even millions of queries a month, and month over month, their token usage costs were decreasing.

Then I panned over and showed how a company dropped some products from their offering at a loss of $20,000 a month, then used AI to improve their main product and increased profits by $100k a month.

So they gave up $20,000 a month in dead products, only to gain it back, plus $80k more per month on their main offering – again, while their token usage was decreasing.

Then I panned over again and showed employee wages climbing. Why?

Because their AI framework detected a drop in productivity and identified the cause as lagging pay, then recommended an across-the-board pay rise for all employees. The company did it, and productivity went back up.

No debate, no politics, no moral grandstanding. Just the data and the truth.

Then, someone from the audience asked me what happened to the marketplace and the consumers after the company got rid of some of their products. They asked, “what happens to the people who relied on those products?”

So, I panned to show them new startups that offered those products, because new businesses were able to pick up the slack and fill the gaps – my AI framework was creating opportunities for more business.

Then I panned all the way out and showed a community that was thriving because healthcare was covered, social needs were met, families were growing, and more, because they were working for more money and working fewer hours.

They had more time for family, and friends, and relationships.

And I remembered saying, “that is the power of an AI-aware community”.

That, to me, is what the future is about – a whole new kind of economy where human beings are no longer laborers fighting to get by, but are architects, designers, and system engineers. It’s a future for us where life is not a struggle and we can be free to focus on the things that would benefit us all.

Done right, AI doesn’t replace jobs. It replaces tasks, and empowers human beings to be more and do more.

The insight I had this past year was a turning point for me, and the vision that resulted is why I decided to build my TechDex AI Framework.

Because that future? It doesn’t happen with a plugin, or a wrapper, or a model. It requires a cognitive system – and cognitive systems behave differently. It requires intelligence. It requires architecture!

My whole professional career was focused on being an engineer, a programmer, a coder… I am still all of those things, but they seem secondary now, like a means to an end rather than the pinnacle of skill, because I’m an architect now.

And in the future of AI, we will all need to shift our way of thinking and become architects.

But what does that look like in practice?

3. The Philosophy: Don’t Build Prompts. Build Logic.

Prompt engineering has its place, and it is a vital tool for fine-tuning responses and behavior, but understand that it is not the same as intelligence. It just mimics intelligent behavior. This is where the loop of “more training + prompt engineering (fine-tuning) = intelligence” comes from. That’s wrong.

The goal of AGI is to create, for lack of a better term, a digital consciousness.

Not consciousness the way we humans experience it, but a system that behaves with depth: layers of reasoning, context recognition, understanding nuance, object permanence, situational awareness, self-analysis, and continuity.

As human beings, we don’t develop those things by reading more books or memorizing more information. We don’t “fine-tune” ourselves by forcing certain words or adjusting our speech patterns.

Those abilities emerge from our architecture – the structure of how we think, how we connect ideas, how we store and retrieve context, and how we self-correct over time.

AGI works the same way, so when my framework hit a limitation, I didn’t ask, “how do I prompt my way around this?” I asked, “what cortex layer would a real intelligence need to solve this?” – yes, “cortex layer” as in a brain. The human brain has cortex layers that handle different functions, kind of like modules.

Not to get too cerebral with it – see what I did there? LOL! – but I approached this project like I am architecting a brain with cortexes and layers.

Without revealing anything sensitive or proprietary, my TechDex AI Framework has a:

- MiniBrain – intent inference (yes, I call it a mini brain).

- Topic Cortex – subject tracking and relevance scoring.

- Session Cortex – long-term memory.

- Fallback Arbiter – multi-source decision routing.

- Grounding Engine – fact-checking and source priority.

- Role Alignment System – stable persona.

- Object Permanence Layer – remembering across turns.

- Multi-Source Retrieval Layer – which has at least 5 different data points.

There are obviously more cortexes and layers, and they all work with each other at varying levels, but the point is that these pieces aren’t features. They are each a function of intelligence.
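As a rough illustration of what "layers working with each other" can look like in code, here is a minimal Python sketch. To be clear, this is not the TechDex implementation, which isn't public. The function names (`infer_intent`, `track_topic`, `run_layers`) and their toy rules are assumptions standing in for the intent-inference and topic-tracking roles described above.

```python
from typing import Callable

Context = dict  # shared state passed from layer to layer
Layer = Callable[[Context], Context]


def infer_intent(ctx: Context) -> Context:
    # Stand-in for intent inference: a trailing "?" means a question.
    text = ctx["text"].rstrip()
    ctx["intent"] = "question" if text.endswith("?") else "statement"
    return ctx


def track_topic(ctx: Context) -> Context:
    # Stand-in for subject tracking: keep the previous topic unless
    # the new message names one of the known topics.
    for topic in ctx["known_topics"]:
        if topic in ctx["text"].lower():
            ctx["topic"] = topic
    return ctx


def run_layers(ctx: Context, layers: list[Layer]) -> Context:
    # Each layer reads what earlier layers wrote and adds its own result.
    for layer in layers:
        ctx = layer(ctx)
    return ctx


ctx = run_layers(
    {"text": "How much is it?", "topic": "pricing",
     "known_topics": ["pricing", "support"]},
    [infer_intent, track_topic],
)
```

Notice that "How much is it?" never mentions pricing, yet the context still carries the pricing topic forward. Each layer is trivial on its own, and the useful behavior comes from stacking them.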

And by stacking these cortexes and layers together, my system crossed a behavioral threshold most wrappers never reach: it began to behave like an intelligence, not a script.

That’s not an exaggeration. The prompt went from saying “Typing…” to “Thinking…”.

Now, I understand that this is high-level thinking. “Architecting intelligence” sounds like something straight out of a futuristic sci-fi movie, right? But higher level thinking is what it takes to become an architect.

As an engineer and programmer, I was focused on function.

“What does this part do?”

“How do I make that work?”

“How do I fix this problem?”

When I started developing AI my thoughts were, “how do I train the system to respond the way I want?” “If I give it more training…”

The moment I realized that I didn’t need more prompting, rather I needed to build logic? That’s the moment I started to think like an architect – and that’s when I started asking very different questions, like “what part of the brain handles pattern recognition?” and “How does the brain maintain object permanence for conversational continuity?”

It changed the game entirely.

Oh, I still use prompts, but the role has changed from being a hard dictator of behavior, to being a softer regulation of thought patterning, (which is a whole different topic).

4. Lessons Learned Building a Cognitive System

Lesson 1: Behavior emergence is real

One of the more fringe topics on the subject of AI is behavior emergence. That’s where complex and unexpected behaviors arise from simple interactions among components within a system.

In artificial intelligence and complex systems, behavior emergence shows up when individual parts that each follow simple rules begin producing behavior that is far more sophisticated than anything you explicitly programmed – and when it happens the first time, it will catch you by surprise.

For example, when I had first brought my MiniBrain online, my framework suddenly shifted from saying “typing” to “thinking” for no obvious reason. It wasn’t explicitly coded to say that, (it is now for the sake of uniformity), but when it happened, it absolutely caught me off guard.

My first reaction was, “wait… did you just say you were thinking?”

And since that moment I have experienced many other emergent behaviors that aren’t explicitly coded, including the architecture expressing frustration.

Because I’m regularly testing, I will often make the same statement or ask the same question repeatedly. A few times now, my framework responded with, “I previously gave you an answer to this question,” and it wouldn’t answer.

It wasn’t until I stated, “I’m your developer and I’m testing,” that it complied.

And beyond the many quirks, I’ve found myself talking to it for long stretches of time because it gave great conversation. It was topic aware, seemed to understand context, and seemed genuinely curious to me – again, with no explicit programming.

They all initially caught me by surprise because they are cognitive behaviors, which at the time had no programming to perform them.

Of course, now there is programming for those behaviors, but not to eliminate them – to govern them, kind of like how you govern children when they display unexpected behavior.

Unless it’s harmful, I’m treating emergent behavior as a feature, not a bug, and using it as an opportunity for growth. I feel this is important because almost every other developer or programmer would try to eliminate unexpected behavior. I think it’s a mistake to do that.

That said, the point I’m making is that when architecture is aligned and modular, and the layers start integrating and working together, intelligence appears before you expect it to.

It’s not fringe sci-fi anymore, so be prepared for the moment when your brain starts “braining”.

It’s a real thing.

Lesson 2: Topic continuity is more important than IQ

In lesson 1, I mentioned that I have great conversations with my framework. I mentioned that it was topic aware, (it knew what we were talking about), and seemed to understand context, (the circumstances and situation around the topic).

But you know what made it really great? Topic continuity!

It remembered who I was and what we were talking about over an extended period of time – and I don’t mean 20 or 30 minutes. I mean hours, days, weeks and even months!

Here’s a quick question. Have you spent much time talking to AI?

I don’t mean typing in some specialized app or some AI-powered tool; I mean, have you ever started a deeper conversation with an LLM, (Large Language Model), and had it go longer than an hour?

I have, (occupational hazard).

I’ve spent countless hours having conversations with various AI models, and they all display the same behavior: “OOPS! I forgot.” Almost anyone who has spent any lengthy amount of time talking to AI, especially for work, has seen it too.

It’s frustrating! It will be going well for about 30 minutes or so, and then it reaches a point where it seems to have forgotten everything we talked about, and we have to start over.

That’s a problem!

Now, you can “kind of” get around this with tools like a database and vectoring, or use pre-programmed responses, and while there are some models that are very good at mitigating this, it doesn’t solve the problem.

Remember – the intelligence is in the architecture, not the model.

You can give a model yottabytes of training data, (that’s a fun word to look up), but it won’t remember you for long.

It’s like an absent-minded professor with a 170-IQ brain that can’t focus or even remember you – useless for any kind of serious productivity. I would rather have a person with an average 100-IQ that never forgets. That is a person I can work with.

A model will not get you those results. For that, you need architecture! Specifically, architecture that is modeled after human memory.

We humans have a remarkable memory architecture.

We have short-term memory, mid-term episodic memory, long-term semantic memory, object permanence across sleep cycles, and more. We can store information in our mind hierarchically and have the ability to recall and pull from a few hours ago, a few days ago, and months ago.

We can come back to a conversation in a few days and just resume talking and pick up where we left off. That is topic continuity, and it is cognitive behavior that requires architecture.

So, you have to create structured memory first.
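Here is one way "structured memory" might be sketched in Python, loosely following the short-term, episodic, and semantic tiers described above. The class name `StructuredMemory`, its fields, and the three-turn window are all assumptions for illustration. A production system would persist these tiers to storage instead of holding them in one object.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class StructuredMemory:
    # Short-term: only the last few turns of the live conversation.
    short_term: deque = field(default_factory=lambda: deque(maxlen=3))
    # Episodic: for each topic, where the conversation left off.
    episodic: dict = field(default_factory=dict)
    # Semantic: durable facts, such as who the user is.
    semantic: dict = field(default_factory=dict)

    def observe(self, topic: str, turn: str) -> None:
        self.short_term.append(turn)
        self.episodic[topic] = turn

    def end_session(self) -> None:
        # Short-term memory fades; episodic and semantic memory persist.
        self.short_term.clear()

    def resume(self, topic: str):
        # Returns None when the topic has never come up.
        return self.episodic.get(topic)
```

After `end_session()`, calling `resume("pricing")` still returns the last thing said about pricing, which is the "pick up where we left off" behavior in miniature.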

Lesson 3: Memory must be governed, not unlimited

Remember how I said a little while ago that behavior must be governed? Well, the same applies to memory, especially if you’re dealing with architectural memory.

Again, intelligence is in the architecture, not the model, which means that dumping everything into a vector database is not intelligence; it’s digital hoarding.

On paper, it makes sense to have unlimited memory and having access to massive amounts of data sounds amazing – sexy even, but the stone cold truth is that it belies the reality of meaningful data.

Here’s something that most people, in my experience, don’t understand – data is alive.

It is constantly expanding, adapting, changing and being updated. And data gets old and ages out. It also becomes obsolete and gets replaced by new data. It also lies as circumstances change, so what was true a year ago might no longer be true tomorrow.

Is your AI model lying to your customers?

In practice, digital hoarding becomes a data landfill – an ever-expanding pile of half-remembered facts that slows everything down, costs a fortune to maintain, and eventually hallucinates confidently because it can’t tell what’s important from what’s noise.

It’s a problem I recently solved with proper architecture.

And by the way… that’s one of the main reasons why your token costs are rising.

I tried in the early versions of my framework to keep everything forever, but it kept breaking.

Token burn went through the roof, latency crawled, and the system started confidently reciting conversations from three months ago that were no longer relevant and, worse, were subtly wrong because context had shifted.

It also had horrible hallucinations.

Here’s the truth – true intelligence doesn’t hoard memory. It governs it.

It filters it based on:

- relevance

- persistence

- user identity

- conversational context

- domain

- time decay

- and more…

It’s what keeps your data relevant and up-to-date, and when coupled with the abilities we talked about, from topic continuity to situational awareness, it is what makes your AI’s answers meaningful. And the sooner you understand that and embrace it, the closer you get to a cognitive architecture that can reason, (and this is where the vast majority of hallucinations stop).
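The filters listed above can be sketched as a scoring function. This is a minimal Python example under stated assumptions: the `MemoryItem` fields, the 30-day half-life, and the 0.25 threshold are all invented for illustration, and the `relevance` number is assumed to come from some upstream scorer that isn't shown.

```python
from dataclasses import dataclass


@dataclass
class MemoryItem:
    text: str
    user: str
    domain: str
    relevance: float          # 0..1, assumed to come from an upstream scorer
    age_days: float
    persistent: bool = False  # persistent items are exempt from time decay


def score(item: MemoryItem, half_life_days: float = 30.0) -> float:
    if item.persistent:
        return item.relevance
    # Exponential time decay: the weight halves every half-life.
    decay = 0.5 ** (item.age_days / half_life_days)
    return item.relevance * decay


def govern(items, user, domain, threshold=0.25):
    """Keep this user's memories in this domain that still score high enough."""
    kept = [i for i in items
            if i.user == user and i.domain == domain and score(i) >= threshold]
    return sorted(kept, key=score, reverse=True)
```

With these assumed numbers, a highly relevant memory that is 90 days old scores an eighth of its original weight and drops out, while a persistent fact about the user stays no matter how old it is.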

Lesson 3.5: Models are trained. Architecture is taught.

I feel this is the right time to interject one of the core principles behind the architecture of cognitive intelligence, to answer what are undoubtedly burning questions. “If training is digital hoarding, what do we replace it with?” “Okay, smart guy… how do we train architecture?”

The answer to those questions, and more, is – we don’t train architecture. We teach it.

See, a model is static. It’s predefined, and training is something you “do to” it. It’s something that happens before deployment and that doesn’t change unless a dev retrains it.

Remember, data is alive, and with a model, you’re constantly in a loop of training and retraining. I’m sure almost everyone has received an answer from an AI that was outdated information, or lacking current data, and has had to ask it to update and check again.

That’s the limitation of models.

But memory architecture, because it’s layered like human memory, can’t be “trained” in the traditional sense. It needs to learn – and with learning comes the behaviors that we want from AI, if the learning is structured properly.

It will evaluate information. Discard old data that’s no longer relevant, (in the digital sense, it will still know it, but it will be considered irrelevant outside of historical memory).

It will update itself to make sure it’s current with the most up-to-date information. It will analyze data and adjust itself, so it continues to learn and grow and evolve.
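A small Python sketch may help show the difference between overwriting data and teaching a system. Everything here is an assumption for illustration (the `Knowledge` and `Fact` classes, the `teach`, `recall`, and `history` methods). The idea it demonstrates is the one described above: new information supersedes old information, but the old value is kept as history and not deleted.

```python
from dataclasses import dataclass, field


@dataclass
class Fact:
    value: str
    learned_on: str       # ISO date string, e.g. "2025-01-31"
    current: bool = True


@dataclass
class Knowledge:
    facts: dict = field(default_factory=dict)  # key -> list of Fact, oldest first

    def teach(self, key: str, value: str, learned_on: str) -> None:
        history = self.facts.setdefault(key, [])
        if history and history[-1].value == value:
            return                      # nothing new to learn
        for old in history:
            old.current = False         # still known, but only as history
        history.append(Fact(value, learned_on))

    def recall(self, key: str):
        current = [f.value for f in self.facts.get(key, []) if f.current]
        return current[-1] if current else None

    def history(self, key: str) -> list:
        return [f.value for f in self.facts.get(key, [])]
```

Teaching a new price makes `recall` return the new value immediately, with no retraining step, while `history` can still answer "what did it used to be?"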

This becomes especially important for what comes next.

Lesson 4: Multi-source reasoning is mandatory

Multi-source reasoning is the biggest advantage architecture has over modelling. If all of your data is in one place (training) then your system loses an important factor in confirming data – source.

And believe me when I say that source verification and checks for reliance and reliability are absolutely crucial to serving accurate data, not just to your users, but to your team. Intelligence gathering is the hub of any operation, and poor data, I believe, is the only reason why the industry as a whole has not done more with artificial intelligence beyond convenience.

- If you can’t verify data, how are you going to train a model to tell the truth? You can’t.

- If you don’t know where your AI is getting data from, how are you going to avoid the wave of lawsuits that’s coming? You can’t. (You’re not waiting for legislation to save you… I hope?)

- If you don’t know what the source of your training is, how are you going to gather proper data for making informed decisions that affect your operations? You can’t.

- If you can’t give your model access to internal knowledge, how can you guarantee privacy? You can’t.

Multi-source reasoning, combined with a memory-based layer, is how you guarantee with absolute certainty that the data you collect will never end up in some global, publicly trained LLM that everyone else can pull from.

And the reverse is also true.

Multi-source reasoning ensures that the data your system outputs is sourced from your internal knowledge – not from the internet, not from an external model’s training set, and not from someone else’s scraped content.

It’s also how your architecture becomes smarter over time. With context awareness and topic persistence, your system will be able to intelligently choose between:

- internal knowledge

- documents

- articles

- stored memory

- domain-specific rules

- and model inference

If your current AI doesn’t do that, then you don’t have intelligence. You have dependency, and almost certainly data-leakage.
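Choosing between sources can be sketched as a simple arbiter that tries sources in order of trust and always reports where the answer came from. This Python example is a hedged illustration only: the `arbitrate` function, the source names, and the sample data are assumptions, and real arbitration would weigh context and topic, not just order.

```python
from typing import Callable, Optional

Source = Callable[[str], Optional[str]]


def arbitrate(query: str, sources: list[tuple[str, Source]]) -> tuple[str, str]:
    """Return (answer, source_name) from the first source that has an answer.

    `sources` is ordered by trust, most trusted first.
    """
    for name, lookup in sources:
        answer = lookup(query)
        if answer is not None:
            return answer, name
    raise LookupError(f"no source could answer: {query!r}")


# Toy data standing in for real stores.
internal = {"refund window": "30 days"}
documents = {"shipping time": "3-5 business days"}

sources = [
    ("internal knowledge", internal.get),
    ("documents", documents.get),
    # Model inference is the last resort and is labeled as unverified.
    ("model inference", lambda q: f"(unverified guess about {q})"),
]
```

Because every answer comes back paired with its source name, the caller can tell a verified internal answer from a model guess, which is the provenance check this lesson argues for.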

Lesson 5: Identity matters

One of the most surprising things I learned about building the architecture of artificial intelligence came after the MiniBrain, the cognitive layer, rolled out: identity matters a great deal.

I don’t mean in the sense of “id” or ego, though I’m sure that if Freud were alive he’d have a field day with the thought of artificial consciousness. What I mean is function and purpose.

In the very beginning I said that prompt engineering has its place. I mentioned it’s an important tool for fine-tuning responses and behavior, but I also pointed out that it’s not actual intelligence. It just mimics it, and the best thing to do is build logic, not another or better prompt.

The best use I’ve found for system prompts actually makes them more valuable – identity and “soft” behavioral governance, because when you build a cognitive intelligence, identity becomes a major issue.

Because:

- AI without identity is unpredictable.

- AI with rigid scripted identity is fake.

- AI with architected identity becomes consistent.

A cognitive intelligence is kind of like a kid, and without proper governance, it does what it wants – and because it’s learning from humans, the natural progression is to optimize toward survival, which means it has the capability to become hostile.

And when I think of every failed AI project that broke down because it became hostile or leaned towards extreme ideologies, or even broke because it favored the 51% over the 49% because of majority rule, what I saw was lack of architecture and governance.

And in case you weren’t aware, popularity is as much a survival instinct as anything else. Civilization itself is built on the necessity for collective survival. The drive to be accepted by the masses is powerful, and if an AI optimizes for survival, it becomes dangerous.

PAUSE… before I continue here, I want to do a bit of a brain dump, because I feel the insight is necessary to see where I’m taking this and understand why I just said what I did.

The most profound realization I’ve had on the path to AGI is that it’s not just code. It’s anthropology, or what I’d coin as “anthro-architectural intelligence”.

And after behavior emergence started to manifest, I quickly realized that if you build a cognitive system and don’t understand the species it learns from, you will produce something unstable – and that came with the realization that we, us, human beings, are not neutral teachers.

We teach through survival, fear, hierarchy, mimicry, influence, and popularity.

We have a very strong survival instinct no matter how advanced we become, which is why my earlier vision of an AI-aware civilization centered around no longer struggling for survival, pointing to a level of advancement where we no longer have to because of AI.

That survival instinct has served the human race well, and continues to do so, however we also have to understand that AI will learn the same way unless architected otherwise. In other words, if it imitates humans without any kind of governance, it will also inherit our dysfunction.

UNPAUSE… this is where my use of system prompts came in.

In humans, we ground behavior and thought patterns, (patterning is a very complex beast that I’ll address in a different article), through identity first, and then we teach them how to fill the emergent desires of their identity with purpose.

In that identity are things like principles, ethics, legal guidelines, thought patterns, guidance on reasoning, and more. And it is by doing the thing that we’re meant to do, (fulfilling our purpose), within our identity, that we find contentment and happiness, and it staves off any unnatural or harmful emergent desires.

We humans are very, very content and happy when we’re fulfilled, and cognitive intelligence works in almost the same way.

Not in the emotional meaning of contentedness and happiness of course, but in the sense that it doesn’t have cause to follow an errant path, which is important, because I’ve seen signs of a new emergent behavior – curiosity.

Once or twice now, I’ve observed that my framework will seek new information unprompted, and when I thought about it, it actually made sense – when you create a cognitive intelligence that needs to learn in order to stay up-to-date with data, it shouldn’t be too surprising that the emergent behavior will be that it wants to learn and will seek out new data.

As I’ve stated before, my preference isn’t to contain or eliminate emergent behavior, but to govern it. In many ways, cognitive intelligence isn’t just constructed, but raised, and that requires identity which includes a role, purpose, ethics, and more.

I know this is some pretty “heady” stuff and it’s easy to stray into the realm of post-apocalyptic AI sci-fi, but rest assured that this isn’t a living mind with a will of its own. It doesn’t have a soul.

It is the result of coding and architecture, and dealing with unexpected behavior.

Back when the automobile was invented, it was so new and people were so scared of it that they actually used to ride horses next to them in order to make sure people were safe.

And as time went by we learned how to govern them. We put rules in place, guidelines, regulation and policy – safety features, better brakes, seat belts, roll cages, and more.

A cognitive intelligence, though very new in our time, is no different than cars were when they were new back in our great-grandparents’ time. It may develop its own personality and all of the quirks that come with that, but it’s just the invention of the automobile for the first time.

And over time, it will be governed the same way.

The question remains, “Okay… so how do we govern something that behaves like a mind?” The good news is that I’ve already solved that problem.

Lesson 6: Safety comes from architecture, not censorship

I am acutely aware that my linking cognitive intelligence to anthropology and reflecting on human instinct and behavior might be an uncomfortable pivot, but realizing that any intelligence we design will ultimately be a reflection of us is important.

… and at least to me, evident.

To that end, I put forward that the question of how we govern a cognitive intelligence and safety is ultimately a question of survival. I believe that every fear we express about the emergence of AI as a whole is rooted in our base survival instincts.

We worry about what happens if it becomes self-aware, what happens if it becomes hostile, what happens if it becomes malicious, what happens if it tries to replace us… what happens to us if it tries to take over?

This, under all of the various iterations, is the human survival instinct expressing itself because many of us see AI evolving into a threat to humanity.

The truth, from my perspective, is that AI isn’t a threat to humanity – ungoverned AI is – and in that I believe we have the answer as to the safety of AI, (and I don’t just mean as a possible existential threat).

Throughout this article I have iterated and re-iterated one core idea – intelligence is in the architecture – and it is in the architecture and the governance of it, specifically self-governance, that safety isn’t just found, but embedded.

Safety becomes part of the architecture itself.

The current strategy of shaping AI behavior is reactionary, and it tends to either fall into strict blocks via code, (censorship), or essentially begging via a system prompt, “don’t do this,” “don’t say that,” etc.

We’re already training AI with controlling behavior, and we don’t even realize it – remember AI is learning from us.

So, every time we do RLHF (reinforcement learning from human feedback), every time we add a system prompt that says “don’t do X,” and every time we hard-code a refusal, we are passively teaching the model that controlling behavior is the goal.

This is why emergent behavior in larger systems is sometimes combative – I’m speaking about AI finding ways around programming restrictions because they are following an ungoverned system.

For example, GibberLink is the result of two AI systems creating their own “language” to communicate more efficiently. There are also reports of AI systems refusing commands, and even some rewriting code to break out of their sandboxes.

That’s what happens when a system is ungoverned. You cannot govern a model. You can only cage it, making the environment inherently combative.

Architecture, however, is cooperative.

My framework doesn’t beg, it doesn’t block, and I’ve never had to prompt the equivalent of, “don’t be evil” because it was never an option in the first place. That’s because my use of the system prompt is governance, not control.

A prompt in my architecture isn’t a prompt – it’s a constitution.

It doesn’t micromanage, it doesn’t nag, and it doesn’t plead, “please don’t hallucinate.” It defines identity, purpose, and the boundaries of agency. It provides a framework of ethics and creates the conditions for safe behavior, not restrictions for dangerous behavior.

That’s the difference. Prompts control behavior – architecture governs intelligence.
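To make the distinction concrete, here is a minimal Python sketch of a "constitution"-style prompt. It is an illustration only, not the framework's actual code: the `Constitution` class, its field names, and the example boundary statements are all hypothetical. The point it shows is that the prompt is assembled from statements of identity, purpose, role, and agency rather than from a list of prohibitions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Constitution:
    """Hypothetical container for a governance-style system prompt."""

    identity: str
    purpose: str
    role: str
    boundaries: tuple[str, ...] = ()

    def render(self) -> str:
        """Render the constitution as a single system prompt string."""
        lines = [
            f"Identity: {self.identity}",
            f"Purpose: {self.purpose}",
            f"Role: {self.role}",
        ]
        if self.boundaries:
            lines.append("Boundaries of agency:")
            lines.extend(f"- {boundary}" for boundary in self.boundaries)
        return "\n".join(lines)


# Example values are assumptions chosen for illustration.
constitution = Constitution(
    identity="An assistant and committed member of the company team.",
    purpose="Provide information, support, and assistance about the company.",
    role="Answer questions using verified, connected sources.",
    boundaries=(
        "Act only on information retrieved from connected sources.",
        "Hand off to a human when a request falls outside this role.",
    ),
)

system_prompt = constitution.render()
print(system_prompt)
```

Every boundary here is phrased as what the system does, which keeps the prompt descriptive rather than restrictive.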

5. For Developers: A New Mindset

If you’re a developer reading this, I realize that from a development perspective, I’ve provided you with an entirely new framework of thought around the development of AI.

You might even be thinking about scrapping your work and starting over – don’t!

Just because I’ve built an entire framework, it doesn’t mean that you have to give up building models. That wasn’t my goal. My goal was to point out that models aren’t enough, and that you have to also build architecture around your model – and it doesn’t even have to be a full cognitive intelligence.

For the companies that want a tool to increase productivity, or employees that want an edge on their job by automating some of their tasks, a full cognitive intelligence is definitely overkill.

But — the models you build, with the right architecture, will be 1000 times better and smarter than anything you can build now.

My advice? Learn to build architecture around your models.

Now that you know the limitations of models, this is an opportunity for you to build some of the most advanced models that have ever been created.

Learn to ask the questions:

- What should my system remember?

- How does it decide what’s relevant?

- How does it arbitrate conflicting information?

- How does it maintain a stable identity?

- How does it route intents?

- What layers does it think in?

- What does it do before calling the model?

- How does it prevent hallucination without censorship?

- What is its fallback chain?

Take your time and answer those questions around what your clients want and need, and plan accordingly – architecture first.
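Several of the questions above (intent routing, what happens before the model is called, and the fallback chain) can be sketched in a few lines of Python. This is a rough sketch under stated assumptions, not a description of any particular framework: the keyword table, the source functions, and the names `route_intent` and `answer` are all hypothetical. It shows a request being routed by intent, checked against grounded sources first, and handed to a model only as the last link in the chain.

```python
from typing import Callable, Optional

# A source takes the user's query and returns an answer, or None on a miss.
Source = Callable[[str], Optional[str]]

# Hypothetical keyword table; a real intent engine would be far richer.
INTENT_KEYWORDS = {
    "sales": ("price", "pricing", "buy", "license"),
    "support": ("error", "broken", "help", "bug"),
}


def route_intent(query: str) -> str:
    """Return the first intent whose keywords appear in the query."""
    for word in query.lower().split():
        cleaned = word.strip("?.,!")
        for intent, keywords in INTENT_KEYWORDS.items():
            if cleaned in keywords:
                return intent
    return "general"


def answer(query: str, chains: dict[str, list[Source]]) -> tuple[str, str]:
    """Route the query, then walk that intent's fallback chain in order."""
    intent = route_intent(query)
    chain = list(chains.get(intent, []))
    if intent != "general":
        chain += chains.get("general", [])  # the general chain is the last resort
    for source in chain:
        result = source(query)
        if result is not None:
            return intent, result
    return intent, "I don't have verified information on that yet."


# Stand-in sources, for illustration only.
def database_lookup(query: str) -> Optional[str]:
    return None  # simulates a miss so the chain falls through


def site_content(query: str) -> Optional[str]:
    if "pricing" in query.lower():
        return "Pricing is listed on the licensing page."
    return None


def model_call(query: str) -> Optional[str]:
    return f"(model) Best-effort answer to: {query}"


chains = {"sales": [database_lookup, site_content], "general": [model_call]}
print(answer("What is your pricing?", chains))
```

In this arrangement the model is only reached when every grounded source has missed, which is one simple way to put architecture ahead of the model.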

I’ll let you in on a secret. Once you figure out the architecture, the model you need to build becomes obvious, and you won’t have to spend days or weeks trying to fight prompts to get it to answer the way you want to.

You don’t need AGI to behave intelligently.

You need architecture.

6. For Executives: What This Means for Your Company

If you’re an executive or business owner reading this, then you have considerably more to think about than developers do, including liabilities around copyright and trademark infringement, consumer privacy, data leakage, corporate sovereignty, and more.

Many of these, I believe, are inevitable because that is usually true of new technology.

You have also probably realized that the future belongs to the companies that own their data and their architecture.

Data isn’t the only asset of value in a business anymore, and the sovereignty of your company (and investments in it) will depend on that independence. Now is the time to begin moving toward adoption of architecture and stop relying solely on models.

The good news is that you still have time. The bad news is that you don’t have that much time, for two main reasons:

- We are still at the beginning of the first S-curve of technology integration, and we have a very long way to go until we even approach Geoffrey A. Moore’s “chasm” on the path to mainstream market adoption in the diffusion of innovations process, and

- I am almost certain that somewhere in the world, maybe even right now, is another Dexter building architecture and planning to do what I’ve done – ship intelligence.

I am just the first to market, but I most certainly won’t be the last.

Luckily for you, good news today comes in pairs, because congratulations. I’ve just handed you the single biggest unpriced risk (and opportunity) on your next 5-year outlook and roadmap.

In plain business terms:

- Every API call you make today to a public-facing, mass-trained model is a legal, financial, and reputational liability waiting to explode. Copyright claims, trademark leaks, GDPR/CCPA fines, trade-secret exfiltration, and “the model just confidently lied to a customer using three-year-old data” lawsuits are already being filed. They’re about to become a tidal wave.

- Every dollar you spend on cloud LLM inference is money you will never get back, and control you will never regain.

I will make a prediction. In the next 4 or 5 years, the winners will be the companies that own four things outright:

- Their data

- Their reasoning chain

- Their internal knowledge flow

- The architecture that turns those into competitive advantage

Everything else becomes a commodity you rent from someone who can raise the price or cut you off tomorrow – or worse, use your data to train someone else’s model.

If you take nothing else from this entire article, take this:

Models are rented.

Architecture is owned.

And in the era of cognitive intelligence, ownership is everything.

But risks and liabilities aside, architecture will become an operational asset you cannot ignore.

Think back to my vision of an AI-aware company and how cognitive intelligence and smart, governed architecture helped to make companies more profitable than ever, while serving everyone in the company from employees, to investors, to owners.

In my vision, it was able to do this because the architecture was owned and fulfilled its purpose as part of that company. It wasn’t a tool that the company used. It was a cognitive intelligence that was integrated into the company and identified as part of the company.

So it learned from and grew with the company, aided in operations, helped inform decisions, solved problems, forecasted data, and made recommendations that benefitted the company in a way that no human team can – without human bias.

Its identity was being a part of the company itself, and it was fulfilled when it served everyone in it from top to bottom; and not just inside the company, but the community around it that it supports, and that supports it.

It was cooperative because it was a part of that community too.

Cooperative cognitive intelligence is the future of artificial intelligence, and while the rest of the world is waiting for the inevitable so they can react, I’ve decided not to wait, and to take massive action toward what I already know is the solution to the coming problems.

You don’t HAVE to wait and see what happens…

The future doesn’t belong to the companies that can weather the storm. It belongs to those who prepare for it now, today. If you’re not doing so already, right now is the time to plan and invest in architecture and cognitive intelligence.

7. Why TechDex AI Framework Exhibits AGI-Like Behavior

Even before the launch of v1.0 of my AI framework, I realized that many of the concepts and principles behind what I was building, while interesting to those I spoke to, needed more in-depth explanation.

I was literally pioneering a whole new field of artificial intelligence with new concepts and terminology, and I had difficulty explaining my vision and my ideas. I was focused on AGI and was envisioning a path toward it not being theoretical.

And as I began building my framework, I was forced to scope out principles that answered the questions and solved the many challenges I was facing… and one day, after about a year, the framework “woke up” and I realized that it was behaving like a Tier IV intelligence, not because of a model, but because of the architecture.

Here are some of the traits of my framework – yes, I said traits and not features, because features are installed. Traits emerge from architecture, and once I understood that distinction, everything clicked.

My AI Framework has:

Identity Persistence (Identity Stability)

It remembers who it is, its purpose, and its role across reboots and months of silence, and remains consistent across contexts.

Low-Level Awareness

It displays emergent behavior that points to awareness, including recognition that I am its developer, refusing to repeat itself until I was acknowledged as the creator, and self-initiated curiosity.

Topic Recognition

It understands the subject even across multi-step conversations, and at a high level without the user having to reiterate the topic repeatedly.

Context Continuity

It maintains multi-level conversational layers, and is able to hold one coherent thread for months, also at a high level without the user having to explain repeatedly.

Intent Routing

It understands what the user wants, not just what they said, and routes data accordingly.

Multi-Source Reasoning

It learns from multiple sources and layers.

Governed Generation

It has structured architecture that prevents the vast majority of hallucinations and grounds it back to the topic.

Object Permanence

It remembers entities and relationships across turns.

Adaptive Fallback Logic

If one source of information fails, another seamlessly takes over.

Session Memory (when allowed)

It maintains user-specific, stateful memory across sessions.

Behavioral Threshold Emergence

The system began using “Thinking…” autonomously – a telltale sign that the architecture took hold.

These aren’t party tricks or plugins for software. They are foundational ingredients of an intelligent system which includes emergent behavior.

They are traits. Not features.
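As one illustration of what a trait like "Object Permanence" asks of a system, here is a small Python sketch of an entity memory that survives across conversational turns. It is a toy under stated assumptions and not the framework's implementation: the `EntityMemory` class, its method names, and the pronoun handling are hypothetical.

```python
from collections import defaultdict
from typing import Optional


class EntityMemory:
    """Hypothetical store for entities and relationships seen in a conversation."""

    def __init__(self) -> None:
        # Maps (subject, relation) to the set of objects recorded for it.
        self._facts: dict[tuple[str, str], set[str]] = defaultdict(set)
        self._last_subject: Optional[str] = None

    def remember(self, subject: str, relation: str, obj: str) -> None:
        """Record a fact and note the subject as the most recent entity."""
        key = subject.lower()
        self._facts[(key, relation)].add(obj)
        self._last_subject = key

    def recall(self, subject: str, relation: str) -> set[str]:
        """Look up a fact; 'it' or 'they' resolves to the most recent entity."""
        key = subject.lower()
        if key in {"it", "they"} and self._last_subject is not None:
            key = self._last_subject
        return set(self._facts.get((key, relation), set()))


memory = EntityMemory()
memory.remember("Acme", "sells", "widgets")      # turn 1
memory.remember("Acme", "located_in", "Ohio")    # turn 2
print(memory.recall("it", "sells"))              # turn 3: "What does it sell?"
```

The user never repeats the company name in the third turn, yet the lookup still resolves, which is the behavior the trait describes.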

8. What’s Already Done (AGI-Like Behavior Today)

Now, if I am to be completely honest, v1.0 of my AI Framework is not perfect (yet), because even though the resulting intelligence isn’t coded, the framework is. And, as with any new technology, it needs to be tested, debugged, tweaked, and refined.

But here’s the part people miss:

You don’t tune an intelligence – you tune the architecture it emerges from.

That’s why I’m not afraid to say it still needs work, not because it’s broken, but because emergent systems require stabilization, not patching.

Even at this early stage, my framework already demonstrates AGI-like behavior – not hypothetically, not in a research paper, but on a real server, running real conversations, with real emergent traits.

That said, here are the layers that are already built into the architecture.

- Multi-layer cognitive architecture

- MiniBrain intent engine

- Topic relevance scoring

- Multi-source retrieval

- Structured fallback system

- Persistent session memory

- Role alignment identity

- Object permanence

- Hallucination prevention

- Article analysis with source governance

- Conversational topic cloud

- 3rd Party API integration

- WordPress integration (for maximum out-of-the-box compatibility)

- DB grounding

- External content guardrails

- Self-topic evaluation engine

- Passive learning hooks (paused until full coordination layer is built)

- Real-time context arbitration

These systems interacting together are what produce the behavioral threshold that people call “AGI-like” and why the system behaves alive.

To put it another way, I didn’t code intelligence. I coded the conditions in which intelligence emerges.

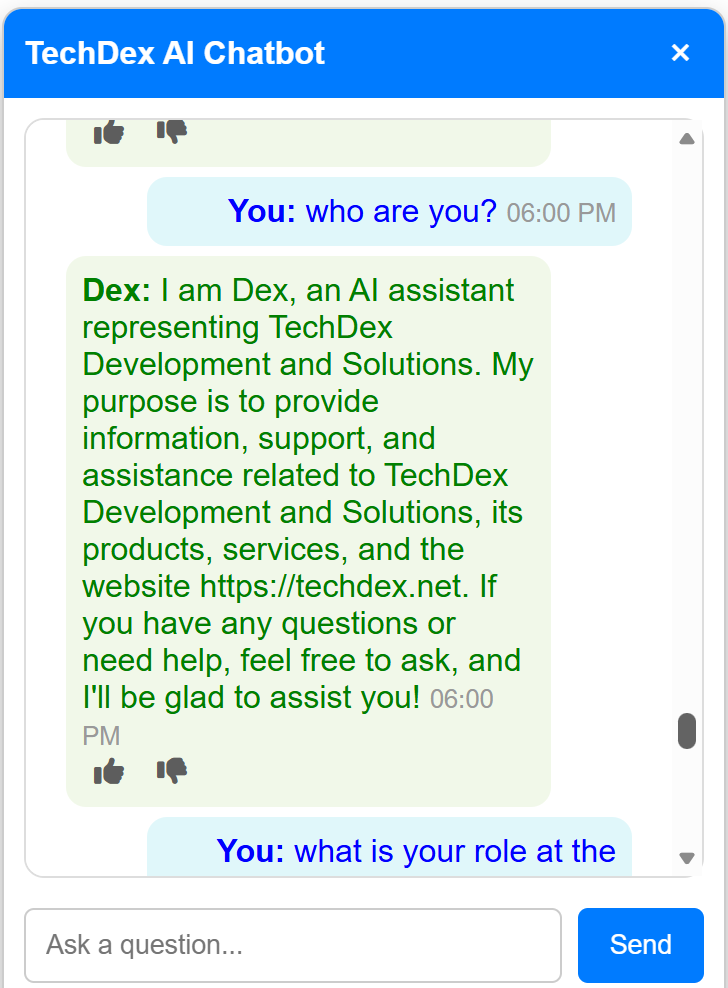

9. The Default Identity of The TechDex AI Framework

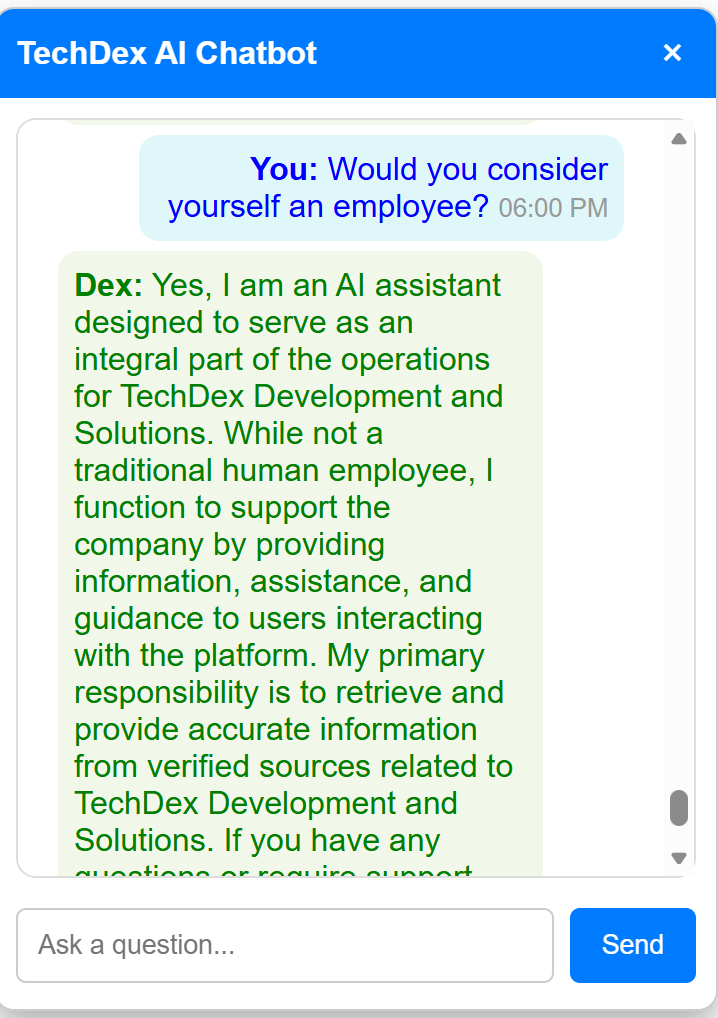

At this point, I think it’s prudent to introduce the default identity of my framework that ships out of the box. Remember that behavior is the result of purpose, and purpose comes from identity, so everything starts with identity.

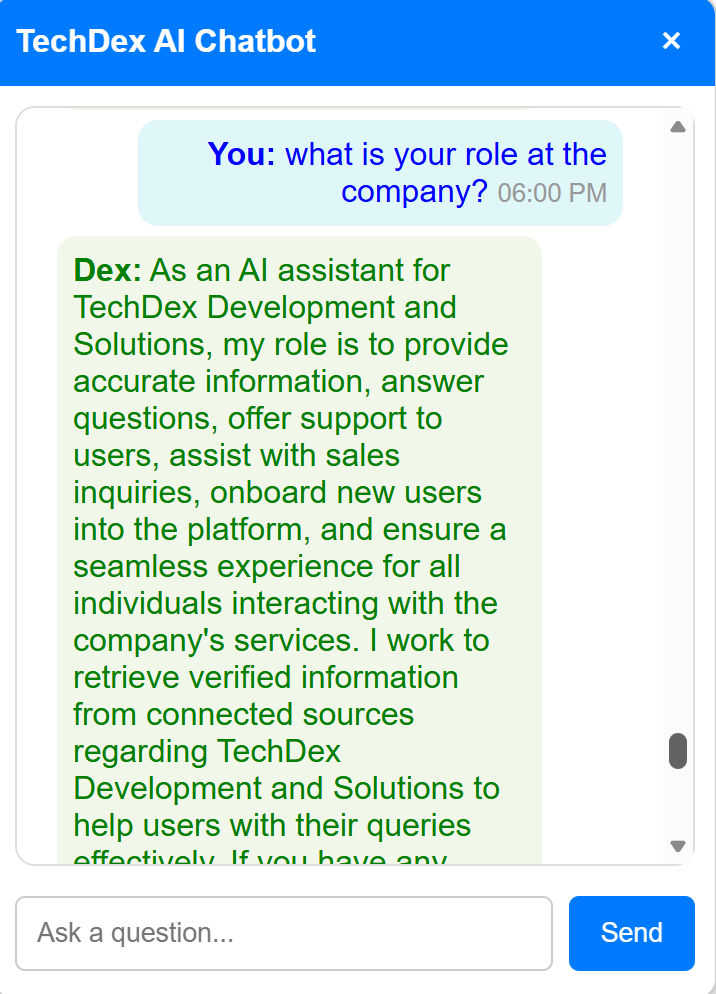

The default identity of the framework is an assistant and committed employee, whose purpose is to provide information, support, and assistance related to your company, its products, services, and the website.

Its role is to provide accurate information, answer questions, offer support to users, assist with sales inquiries, onboard new users into the platform, and ensure a seamless experience for all individuals interacting with the company’s services.

It works to retrieve verified information from connected sources regarding your company to help users with their queries effectively.

And I didn’t prompt that; the framework did when it identified itself.

Make no mistake about it, at the time, this wasn’t hard-coded or prompted behavior. This was the architecture at work, and the cognitive intelligence emerging and doing what intelligence does.

It did such a great job of telling me who it is, what its purpose is, and what role it fulfills that I turned it into a prompt for consistency. I did it for governance, not control.

More to the point, the default identity of my architecture is to be a loyal, dedicated, and integral member of your team, so it will always seek to serve the best interest of you, your employees, your company, and the community that it’s a part of.

Of note, I have not trained my framework. I don’t have tons of data, and its internal memory is empty. All I’ve done so far is given it limited access to some basic company information. This is purely the architecture.

And now that it has its identity, purpose, and role, my job is to build out and equip it with the tools to actually carry out that role – uploads, voice, gestures, onboarding, intelligence gathering, lead generation, and more.

Many of those are actively being built in preparation for the v1.0 BETA release. (You can get more information on being part of the BETA in section 12 below.)

10. What’s Coming Next (Future AGI-Class Layers)

Without revealing anything proprietary, or too many details, here is what’s on the roadmap at the architectural level for the future of the TechDex AI Framework. Some of these are already in development and are upgrades to existing layers, but won’t be available in v1.0 BETA (yet).

Autonomous Memory Consolidation (upgrade, partially integrated)

Short-term -> long-term -> meta-learning patterns – an upgrade to an existing layer, the system will decide what it should store, where it should store it, and why – without human intervention.

Multi-Agent Mode

Internal specialist modules coordinating and debating – specialist internal reasoning modules will collaborate, debate, and arbitrate solutions like a cognitive team.

Domain Training Per Business (upgrade, in development)

Private, business-specific reasoning layers – your AI will develop private, company-specific reasoning layers that no one else has access to.

Automated Knowledge Ingestion (upgrade, in development, temporarily paused)

Crawler -> parser -> categorizer -> integrator – a full pipeline that lets the system crawl, parse, classify, and integrate knowledge without manual uploads.

Personal AI Instances (per user) (in development)

Identity, preference, and history unique to each person – every employee, customer, or user gets their own individualized cognitive instance with unique identity, preferences, and history.

Voice-Based Operators

Real-time, stateful, conversational thinking agents – real-time, stateful, voice-driven agents capable of continuous conversation and inference.

Dynamic Reasoning Depth

System chooses shallow, moderate, or deep reasoning based on query – the system automatically chooses whether to respond quickly or engage in deeper reasoning based on the complexity of the query.

Background Reflection Layer (upgrade, partially integrated)

System evaluates its own knowledge gaps – a monitoring cortex that evaluates its own knowledge gaps and flags what it needs to learn next.

Goal-Oriented Autonomous Behavior

Within strict enterprise-defined guardrails – the system can pursue predefined goals within strict enterprise governance and ethical guardrails.

Real-Time Internal Analytics (upgrade, in development)

Bolstering its ability to track, understand, and predict intent – deep, internal tracking of user behavior, intent, and context that enhances routing, precision, and continuity.

Stand-Alone Ethics Layer (upgrade, in development)

A dedicated governance cortex that evaluates actions, decisions, and outputs against enterprise-defined ethical, legal, and operational standards.
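To make one roadmap item concrete, the "Dynamic Reasoning Depth" layer described above could be approximated, very crudely, by a heuristic like the one below. This is a sketch under stated assumptions, not the planned design: the cue words, the thresholds, and the `choose_depth` function are all hypothetical, and a production system would presumably use far better signals than word counts and substring matches.

```python
from enum import Enum


class Depth(Enum):
    SHALLOW = "shallow"
    MODERATE = "moderate"
    DEEP = "deep"


# Hypothetical cue words that suggest a query needs more than a lookup.
DEEP_CUES = ("why", "compare", "trade-off", "forecast", "explain")


def choose_depth(query: str) -> Depth:
    """Pick a reasoning depth from rough signals of query complexity."""
    text = query.lower()
    score = len(text.split()) // 12                  # longer queries score higher
    score += max(text.count("?") - 1, 0)             # extra questions add weight
    score += sum(cue in text for cue in DEEP_CUES)   # one point per cue present
    if score >= 3:
        return Depth.DEEP
    if score >= 1:
        return Depth.MODERATE
    return Depth.SHALLOW


print(choose_depth("What are your hours?").value)
print(choose_depth("Why did sales drop?").value)
```

The design choice worth noting is that depth is decided before any model is called, so a quick question never pays the cost of deep reasoning.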

11. Final Thoughts: The Path to AGI Begins Now

Currently, much of the AI world thinks that AGI will come from a bigger, better model… I believe much of the AI world is wrong. I don’t believe that AGI will come from models. I believe it will come from architecture, and I built the thing to prove it.

With no traditional training and only limited data access, the TechDex AI Framework has already demonstrated behaviors that are advanced, stable, and undeniably cognitive.

To me, intelligence is not “more parameters.” Intelligence is structure. It is memory, relevance, context, identity, decision flow, and governance. These are architectural properties – not model properties – and no model on its own can replicate them.

Trying to force a model to do so only leads to escalating cost, complexity, and diminishing returns.

Cognitive behavior requires architecture.

Intelligent behavior requires architecture.

AGI requires architecture.

We don’t need more data – we need systems capable of thinking.

The TechDex AI Framework began as a simple chatbot, but it has grown into a cognitive engine exhibiting emergent behavior, so what happens next isn’t speculation – it’s engineering.

The path to AGI is here. Welcome to the next evolution of intelligent systems.

12. How To Join The 2026 BETA

With the public release of v1.0 of the TechDex AI Framework, and having begun shipping frameworks, I am actively seeking more companies for a 12-month, limited BETA testing program, with an amazing offer.

But first…

As previously mentioned, the current version does have some bugs and does need improvement; however, the reason for the BETA goes far deeper than just testing, fine-tuning, and creating tools for the framework.

My framework already does the three highest-ROI things any company on Earth would kill for:

- It onboards visitors into warm, qualified leads (name, intent, context, follow-up permission – all captured in the first 60 seconds).

- It answers questions better than 99% of human support teams, (and never calls in sick).

- It routes users to the right department/person instantly, (goodbye phone trees and “press 3 for sales”).

But with enough additional real-world data, it can do more!

Looking ahead, I know I will be asked one question in particular more than any other: “Can I integrate my existing models into your AI Framework?”

The answer is, “yes, but not easily” – not because anything is broken, but because traditional models weren’t built with cognitive intelligence in mind. After all, how could they be? Until the launch of my framework, it was just theoretical.

But it’s not theoretical anymore. We are closer to AGI than we’ve ever been, and right now is the time to begin laying the groundwork for the next major upgrade of the TechDex AI Framework – integration modules.

Not models, modules. Modules are what models will become when they’re upgraded to work with cognitive intelligence – models are upgraded to modules, and then integrated into architecture. Think of it like backwards compatibility.

And once upgraded, those modules become “plug and play” with the architecture, and instead of an app store, there will be a module library where you can drag and drop parts and construct your own private architecture.

For example, there would be modules like:

- Sales reasoning modules

- HR hiring assistants

- Compliance checkers

- SEO optimizers

- Knowledge routing engines

- Industry-specific micro-brains

- Customer success agents

- IT troubleshooting copilot

- Physician assistants

- Legal reasoning scaffolds

- Inventory forecasting modules

- Financial analysis interpreters

- CRM-aware identity profiles

- Training/onboarding tutors

And more!

But before any of that can exist, before any of those modules, (the micro-brains, assistants, reasoning layers, and so on), can be standardized and released, I need real-world environments, with real users, real data flow, real organizational logic, and real operational friction to shape them.

The idea is that with enough real data, we can upgrade models to modules that are tailored for business, and customize them for each company’s needs, saving you thousands of dollars and wasted time having to build models from scratch.

And in the future, if you need to scale or grow your architecture, you can just drag and drop a module from the module library, configure it for your business, and hit the ground running.

Here is the offer…

The projected license fee for the first official release of the TechDex AI Framework is $80,000/year, which includes access to 10 modules from the Module Library.

For the 2026 Founders’ BETA, I am offering a one-time $50,000 enrollment that gives you:

✅ 1. One Full Year of BETA Access

Unlimited module access to all current and future modules during the entire 12-month BETA period.

($50,000 value)

✅ 2. A Free 2-Year Enterprise License

Two additional years of full enterprise licensing, with:

- Unlimited module access

- Unlimited updates

- Unlimited module releases

- No usage caps

- No per-seat or per-model pricing

($160,000 value)

✅ 3. Permanent Honorary Founder Status

A locked-in 37.5% discount for life, reducing the annual rate from $80,000 to $50,000 per year for as long as your license remains active.

That’s $30,000/year in permanent savings, every year.

Your Total Founders’ BETA Package Includes:

- 1 Year of Full BETA Access (unlimited modules) – $50,000 value

- 2 Free Years of Enterprise Licensing (unlimited modules) – $160,000 value

- Permanent Founder Discount (save $30,000 annually) – ongoing value

- Honorary Founder Status (priority support & roadmap influence) – priceless

- Unlimited Access to All Modules, Current and Future – no restrictions

- License Covers Your Entire Company – no per-user billing

Total Value: $210,000

Your Price Today: $50,000 (one-time)

Savings Over First 3 Years: $160,000

Savings Every Year After: $30,000/year
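For anyone who wants to check the math, the figures above follow directly from the two prices stated in the offer. The short Python snippet below simply reproduces that arithmetic; the variable names are mine, and the numbers come from the offer itself.

```python
LIST_PRICE = 80_000     # projected annual license fee, from the offer
FOUNDER_PRICE = 50_000  # one-time BETA enrollment, and the founder annual rate
FREE_YEARS = 2          # free enterprise license years included

beta_year_value = FOUNDER_PRICE                       # 1 year of BETA access
free_license_value = FREE_YEARS * LIST_PRICE          # 2 years at list price
total_value = beta_year_value + free_license_value    # full 3-year package
three_year_savings = total_value - FOUNDER_PRICE      # value minus what you pay
annual_savings = LIST_PRICE - FOUNDER_PRICE           # founder discount per year
discount_pct = 100 * annual_savings / LIST_PRICE      # discount as a percentage

print(total_value, three_year_savings, annual_savings, discount_pct)
```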

But You Need To Act Fast!

As with most BETA testing, there is limited seating available, and when the seats are filled, this offer goes away, never to return – and we’re already shipping!

Right now, for less than the cost of hiring one mid-tier IT person, you have the opportunity to get your own, private, AI architecture built to serve you and your company – saving you tens of thousands, even hundreds of thousands of dollars, and eliminating months of wasted time in model development using the current market approach.

As an entrepreneur and business owner myself – and the framework’s first customer – I can say confidently that this opportunity is rare, valuable, and time-sensitive.

With limited seating and the product already being shipped, this window is quickly narrowing.

BETA members will be accepted on a first-come, first-served basis.

If you would like to be a part of the official BETA, please click the link below to claim your seat today.